AWS-BASED GIT PIPELINES

Abstract: AWS Developer Tools provide a strong foundation for building CI/CD solutions. This article shows how to add automatic creation/starting and deletion of pipelines on create branch/push and delete branch operations in a CodeCommit source repository. The source code for the solution is included.

Introduction

The release of the SVN pipelines described in my previous article was a great success. It inspired us to create a similar automation solution for projects migrated from SVN to Git-based AWS CodeCommit repositories.

Note: This article describes a solution for building CI pipelines in AWS and assumes that you have experience with AWS Developer Tools, bash scripting, Terraform, Docker, and Linux, and that you have a good understanding of the application build process. The earlier solution is described in my other article, AWS CodePipeline-based CI Solution for Projects, Hosted Anywhere, Using SVN as an Example. I have reused some of the same information here, so there is no need to visit the previous article if you are interested only in Git pipelines.

I’ll begin with how Git pipelines work, and then I’ll cover automation.

How Git Pipelines Work

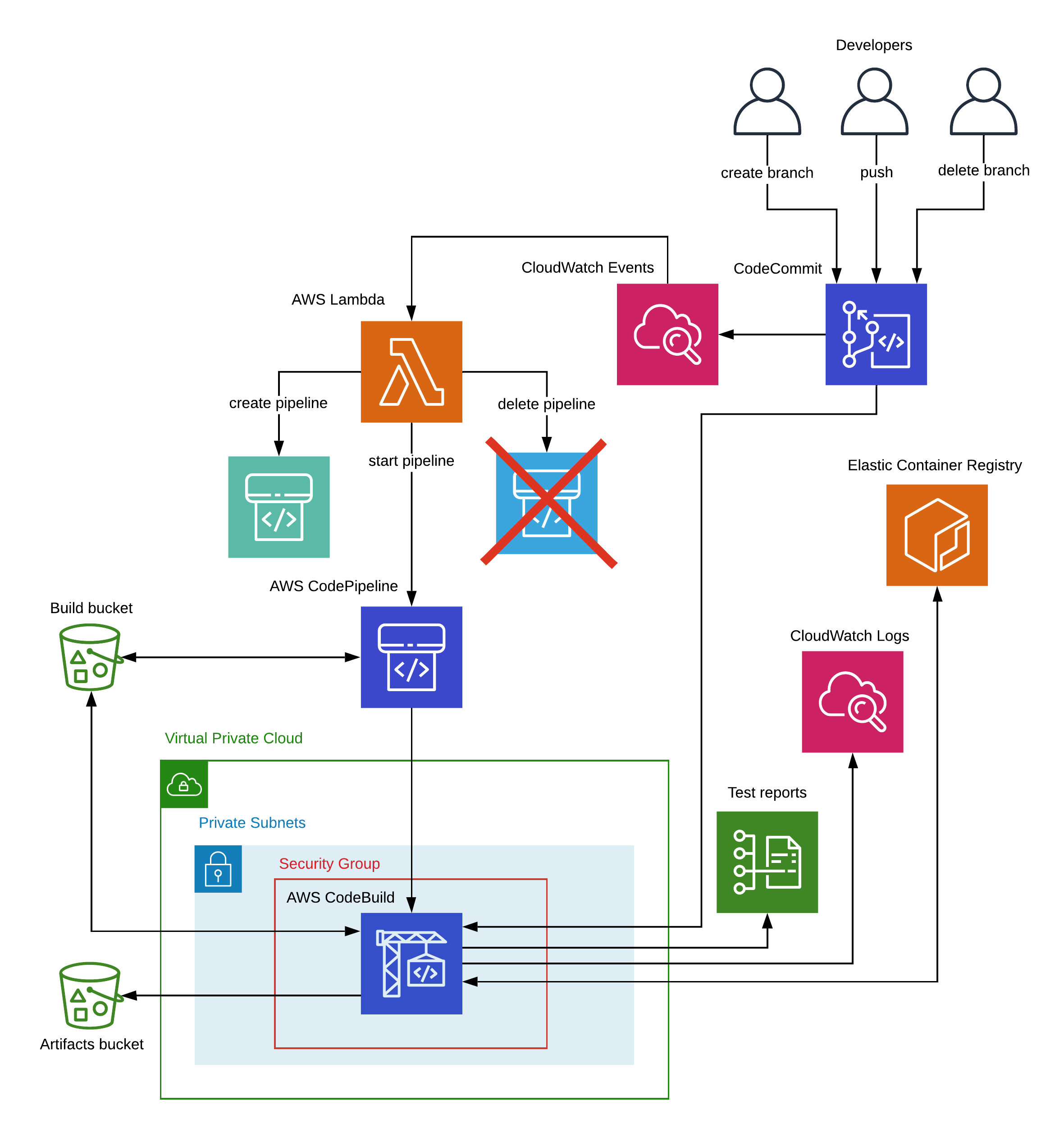

For each project/branch pair, a new AWS CodePipeline pipeline is created automatically. It reuses common resources, such as IAM roles, security groups, etc. Each pipeline consists of two stages: source and build. Let me describe how each stage works.

Pipeline Source Stage

For the source action, we use Amazon S3 as a provider:

{
  "name": "Source",
  "actions": [
    {
      "name": "Source",
      "actionTypeId": {
        "category": "Source",
        "owner": "AWS",
        "provider": "S3",
        "version": "1"
      },
      "configuration": {
        "S3Bucket": "[[[build_bucket_name]]]",
        "S3ObjectKey": "git_pipelines/build_scripts.zip"
      },
      "outputArtifacts": [
        {
          "name": "source_output"
        }
      ],
      "runOrder": 1,
      "inputArtifacts": []
    }
  ]
}

During the source stage, the pipeline downloads build_scripts.zip from our Amazon S3 build bucket, and passes its contents to the build stage.

Pipeline Build Stage

We use AWS CodeBuild for the pipeline build stage. Only one build stage of a specific pipeline can be run at a particular moment in time.

Note: This makes perfect sense, as each pipeline should represent a specific branch in a specific project. You could think of Jenkins pipeline settings, where you specify the project and branch to build. Sometimes it is more complicated, and there are so-called superseded executions.

The build state ‘lives’ in the pipeline, not in the AWS CodeBuild project. This allows us to avoid creating a new project for each new pipeline and to reuse the same AWS CodeBuild project for all pipelines. In this configuration, we preserve the ability to build different Git projects, as well as their developer branches, in parallel.

In our case, we need to tell the AWS CodeBuild project which Git project and branch to check out. This is where REPOSITORY_NAME and BRANCH_NAME come into play. They are supplied by the pipeline, which stores them in the EnvironmentVariables block of its build stage configuration:

"configuration": {
  "EnvironmentVariables": [
    {
      "name": "REPOSITORY_NAME",
      "type": "PLAINTEXT",
      "value": "myRepositoryName"
    },
    {
      "name": "BRANCH_NAME",
      "type": "PLAINTEXT",
      "value": "myFeatureBranch"
    }
  ],
  "ProjectName": "git-codebuild-project"
}
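CodePipeline action configuration values are strings, so the EnvironmentVariables block is stored as a JSON-encoded string (the Lambda and Terraform code later in this article serialize it with JSON.stringify and jsonencode). The following is a minimal sketch, not part of the original solution, of reading the two values back from a build action. The function name readBuildTarget is hypothetical.

```javascript
// Hypothetical helper (not part of the original solution): extracts the
// repository and branch from the CodeBuild action of a pipeline declaration.
function readBuildTarget(buildAction) {
  // Action configuration values are strings, so the list of variables
  // arrives JSON-encoded and has to be parsed first.
  const variables = JSON.parse(buildAction.configuration.EnvironmentVariables || "[]");
  const byName = {};
  for (const variable of variables) {
    byName[variable.name] = variable.value;
  }
  return {
    repositoryName: byName.REPOSITORY_NAME,
    branchName: byName.BRANCH_NAME,
  };
}

// Example with the values used in this article.
const target = readBuildTarget({
  configuration: {
    ProjectName: "git-codebuild-project",
    EnvironmentVariables: JSON.stringify([
      { name: "REPOSITORY_NAME", type: "PLAINTEXT", value: "myRepositoryName" },
      { name: "BRANCH_NAME", type: "PLAINTEXT", value: "myFeatureBranch" },
    ]),
  },
});
console.log(target.repositoryName + "/" + target.branchName);
```

A helper like this can be handy when checking which repository and branch an existing pipeline was created for.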

When the AWS CodeBuild project starts, it follows instructions specified in buildspec.yml, which is provided by the pipeline source action.

In buildspec.yml, we invoke get_source.sh, which runs git to check out the code from the CodeCommit repository:

git clone --single-branch --branch $BRANCH_NAME https://git-codecommit.ca-central-1.amazonaws.com/v1/repos/${REPOSITORY_NAME}

Each Git project has a build.sh script, which contains the build commands specific to that project. After checking out the source code, buildspec.yml invokes the build.sh script to start the build process.

Git Pipelines Automation

You might say: “That’s great, but what about automation?” It is a good question, as creating pipelines manually is not developer-friendly. The good news is that we can automate this as well. I have added the following automation to the CodePipeline pipelines:

- When a developer creates a new remote branch in a CodeCommit repository, a new pipeline is created.

- When a developer pushes a change to a branch, its pipeline is started.

- When a developer finishes working on a feature branch, merges the pull request, and deletes the branch, the pipeline is deleted.

Note: By default, a feature branch is deleted on merging pull request to master in the AWS CodeCommit console.

We set up an AWS CloudWatch Event Rule that listens for state changes in CodeCommit repositories and triggers an AWS Lambda function, which in turn creates, starts, or deletes pipelines:

switch (eventType) {
  case "referenceCreated":
    createPipeline(repositoryName, referenceName, pipelineName);
    break;
  case "referenceUpdated":
    startPipeline(pipelineName);
    break;
  case "referenceDeleted":
    deletePipeline(pipelineName);
    break;
  default:
    console.log("Skipping event: " + eventType);
}
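The snippet above does not show where eventType, repositoryName, referenceName, and pipelineName come from. Here is a minimal sketch of one way to derive them. It assumes that the Lambda function receives the standard CodeCommit Repository State Change event, whose detail object carries event, repositoryName, referenceType, and referenceName, and that pipelines follow the git-<repository>-<branch> naming used in the Terraform code later in this article. The helper names toPipelineName and parseCodeCommitEvent are hypothetical.

```javascript
// Hypothetical helper: builds a pipeline name following the
// "git-<repository>-<branch>" convention of the Terraform code in this article.
// CodePipeline names allow only letters, digits, '.', '@', '-' and '_'
// (up to 100 characters), so other characters such as '/' are replaced.
function toPipelineName(repositoryName, referenceName) {
  const raw = "git-" + repositoryName + "-" + referenceName;
  return raw.replace(/[^A-Za-z0-9.@_-]/g, "-").slice(0, 100);
}

// Hypothetical helper: maps a "CodeCommit Repository State Change" event
// to the variables used by the switch statement. Returns null for
// references that are not branches (for example, tags).
function parseCodeCommitEvent(event) {
  const detail = (event && event.detail) || {};
  if (detail.referenceType !== "branch") {
    return null;
  }
  return {
    eventType: detail.event,
    repositoryName: detail.repositoryName,
    referenceName: detail.referenceName,
    pipelineName: toPipelineName(detail.repositoryName, detail.referenceName),
  };
}

// Example event, reduced to the fields used above.
const parsed = parseCodeCommitEvent({
  "detail-type": "CodeCommit Repository State Change",
  detail: {
    event: "referenceCreated",
    repositoryName: "myRepositoryName",
    referenceType: "branch",
    referenceName: "feature/login",
  },
});
console.log(parsed.pipelineName);
```

Note that replacing characters can map two different branch names (for example, feature/login and feature-login) to the same pipeline name, so a real handler may need a stricter naming scheme. BRANCH_NAME should still receive the original, unmodified referenceName.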

The createPipeline function in the Lambda function handler contains the template for the new pipeline, which is populated with the specific values of REPOSITORY_NAME and BRANCH_NAME:

function createPipeline(repositoryName, referenceName, pipelineName) {
  var environmentVariables = [
    {
      name: 'REPOSITORY_NAME',
      type: 'PLAINTEXT',
      value: repositoryName
    }, {
      name: 'BRANCH_NAME',
      type: 'PLAINTEXT',
      value: referenceName
    }
  ];
  var params = {
    pipeline: {
      name: pipelineName,
      roleArn: 'arn:aws:iam::' + ACCOUNT_ID + ':role/git-codepipeline-role',
      stages: [
        {
          name: 'Source',
          actions: [
            {
              name: 'Source',
              actionTypeId: {
                category: 'Source',
                owner: 'AWS',
                provider: 'S3',
                version: '1'
              },
              runOrder: '1',
              configuration: {
                'PollForSourceChanges': 'false',
                'S3Bucket': BUILD_BUCKET,
                'S3ObjectKey': 'git_pipelines/build_scripts.zip'
              },
              outputArtifacts: [
                {
                  name: 'source_output'
                }
              ],
              inputArtifacts: []
            }
          ]
        },
        {
          name: 'Build',
          actions: [
            {
              name: 'Build',
              actionTypeId: {
                category: 'Build',
                owner: 'AWS',
                provider: 'CodeBuild',
                version: '1'
              },
              runOrder: '1',
              configuration: {
                'EnvironmentVariables': JSON.stringify(environmentVariables),
                'ProjectName': 'git-codebuild-project'
              },
              outputArtifacts: [],
              inputArtifacts: [
                {
                  name: 'source_output'
                }
              ]
            }
          ]
        }
      ],
      artifactStore: {
        type: 'S3',
        location: BUILD_BUCKET
      }
    }
  };
  CODEPIPELINE.createPipeline(params, function(err, data) {
    console.log(err
      ? "cannot create pipeline <" + pipelineName + ">. Reason: " + err
      : "successfully created <" + pipelineName + ">"
    );
  });
}

When we push a new branch to a CodeCommit repository, the AWS CloudWatch Event Rule invokes our Lambda function, which creates a new pipeline for us.
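The startPipeline and deletePipeline functions called from the switch statement are not listed above. The following is a minimal sketch of how they might look, using the startPipelineExecution and deletePipeline operations of the CodePipeline client. Unlike createPipeline above, the client is passed in as a parameter here; this is an assumption made so that the sketch can run without AWS access, and the recording stand-in client is purely illustrative.

```javascript
// Sketch of the two helpers referenced by the switch statement.
// The CodePipeline client is injected (an assumption of this sketch).
function startPipeline(codepipeline, pipelineName) {
  codepipeline.startPipelineExecution({ name: pipelineName }, function (err, data) {
    console.log(err
      ? "cannot start pipeline <" + pipelineName + ">. Reason: " + err
      : "successfully started <" + pipelineName + ">"
    );
  });
}

function deletePipeline(codepipeline, pipelineName) {
  codepipeline.deletePipeline({ name: pipelineName }, function (err, data) {
    console.log(err
      ? "cannot delete pipeline <" + pipelineName + ">. Reason: " + err
      : "successfully deleted <" + pipelineName + ">"
    );
  });
}

// Stand-in client that only records calls. In the Lambda function this
// would be a CodePipeline client from the AWS SDK for JavaScript.
const calls = [];
const fakeClient = {
  startPipelineExecution: function (params, callback) {
    calls.push(["start", params.name]);
    callback(null, {});
  },
  deletePipeline: function (params, callback) {
    calls.push(["delete", params.name]);
    callback(null, {});
  },
};

startPipeline(fakeClient, "git-myRepositoryName-myFeatureBranch");
deletePipeline(fakeClient, "git-myRepositoryName-myFeatureBranch");
```

Injecting the client also makes the handler easier to unit test, since no real pipelines are touched.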

More on Project Build Using a Real-world Scenario

Now that we have discussed how pipelines work and how to automate them, let’s move on to the question of how they can be used to build real-world applications.

For our builds, we are using a custom Docker image, which is published to our Amazon ECR repository.

Note: You can build this image yourself and add all of the tools needed to build your projects. Preparing such an image is beyond the scope of this article and is not covered here. You can use one of the AWS CodeBuild standard images with slight modifications to the code in this article, but for better customization and a faster build process, a custom image is preferable. In this article, I will show you how to use one.

Let’s assume that we are going to build a Java web application using Gradle and run Selenium web tests. Let’s consider how to make this work in more detail.

AWS CodeBuild pulls our custom Docker image from the Amazon ECR, uses it to start a Docker container, and executes commands from the buildspec.yml. This starts a Docker daemon, checks out our project’s source code from Git using environment variables and runs the build.sh script. Our projects have different structures (some of them are just simple libraries, others are complex web applications with several Gradle subprojects), and thus require custom build.sh scripts. We store these scripts in the root directory of each project. Our Gradle build scripts perform the build, run unit tests, pull Docker images for the database server and headless Chrome from Docker repositories, then start all of the Docker containers and run the Selenium web tests.

After the build finishes, we save the build reports to the Amazon S3 build bucket, collect all of the test results in the ./test-results folder for the AWS CodeBuild Test Reports feature and then publish new Docker images with our web application to the Amazon ECR repository (see Git pipelines architecture diagram below). If we are building Angular applications, we can zip and upload artifacts to our artifacts Amazon S3 bucket for our deployment pipelines.

If the build fails, you can look in the following places to find the reasons why this happened:

- Build console output in CloudWatch logs;

- Test reports in AWS CodeBuild Console;

- Reports in the Amazon S3 bucket (in case the static code analysis tool fails the build).

The next section shows typical examples of the commands we use in our projects.

Commands Which We Use In Our build.sh

- Build the Gradle Java project:

    ./gradlew build
    ret=$?

- Save build reports to the Amazon S3 bucket:

    zip -r -q reports.zip ./build/reports
    aws s3 cp reports.zip s3://[[[your_build_bucket_name]]]/reports/[[[your_project_name]]]/"${BRANCH_AND_REVISION}.zip"

- Prepare test results for the AWS CodeBuild reports feature:

    mkdir -p $CODEBUILD_SRC_DIR/test-results/test
    cp ./build/test-results/test/*.xml $CODEBUILD_SRC_DIR/test-results/test
    mkdir -p $CODEBUILD_SRC_DIR/test-results/testbatch
    cp ./app1-batch/build/test-results/test/*.xml $CODEBUILD_SRC_DIR/test-results/testbatch
    mkdir -p $CODEBUILD_SRC_DIR/test-results/testclient
    cp ./app1-client/build/test-results/test/*.xml $CODEBUILD_SRC_DIR/test-results/testclient

- Publish Docker images for web and batch applications, created during the build process:

    if [ $ret -eq 0 ]; then
      # AWS CLI v1 syntax; AWS CLI v2 replaces get-login with get-login-password
      $(aws ecr get-login --no-include-email)
      docker tag app1:latest $AWS_ACCOUNT_ID.dkr.ecr.ca-central-1.amazonaws.com/app1:$BRANCH_AND_REVISION
      docker push $AWS_ACCOUNT_ID.dkr.ecr.ca-central-1.amazonaws.com/app1:$BRANCH_AND_REVISION
      docker tag app1-batch:latest $AWS_ACCOUNT_ID.dkr.ecr.ca-central-1.amazonaws.com/app1-batch:$BRANCH_AND_REVISION
      docker push $AWS_ACCOUNT_ID.dkr.ecr.ca-central-1.amazonaws.com/app1-batch:$BRANCH_AND_REVISION
    fi
    exit $ret

Monorepo

We have many projects, all of which have been moved to a monorepo, i.e. one repository that hosts many projects. When we commit and push changes to the CodeCommit repository, we want to rebuild only the projects whose source files have changed. For example, if we have 3 projects in the monorepo:

/project1/build.sh

/project1/src/main/java/com/example/MyApplication1.java

/project2/build.sh

/project2/src/main/java/com/example/MyApplication2.java

/project3/build.sh

/project3/Dockerfile

and we change /project1/src/main/java/com/example/MyApplication1.java and /project3/Dockerfile, we would like to rebuild only project1 and project3, but not project2. For this purpose, I wrote builds_runner.sh, which analyzes the changeset and runs the builds only for projects with changes:

#!/bin/bash

CHANGES=($(git diff --name-only HEAD^1 HEAD))

# remove filenames from paths
SRC_DIR=$(pwd)
for i in "${!CHANGES[@]}"; do
  DIRNAME=$(dirname "${CHANGES[$i]}")
  CHANGES[$i]="$SRC_DIR/$DIRNAME"
done

# leave only unique dirs, which exist: if N files changed in the same dir we want to search for build.sh in this directory only once
UNIQUE_FOLDERS=()
for i in "${!CHANGES[@]}"; do
  CURRENT_PATH="${CHANGES[$i]}"
  if [ -d "$CURRENT_PATH" ]; then
    is_same=false
    for j in "${!UNIQUE_FOLDERS[@]}"; do
      if [[ $CURRENT_PATH == "${UNIQUE_FOLDERS[$j]}" ]]; then
        is_same=true
        break
      fi
    done
    if [ $is_same = false ]; then
      UNIQUE_FOLDERS=("${UNIQUE_FOLDERS[@]}" "$CURRENT_PATH")
    fi
  fi
done

# Search for build.sh in current folder and its parent folders until we find it.
BUILD_SCRIPTS_PATHS=()
for i in "${!UNIQUE_FOLDERS[@]}"; do
  path=${UNIQUE_FOLDERS[$i]}
  echo "--"
  while :
  do
    BUILD_SCRIPT_PATH=$(find "$path" -maxdepth 1 -mindepth 1 -name build.sh)
    if [[ -n $BUILD_SCRIPT_PATH ]]
    then
      BUILD_SCRIPTS_PATHS=("${BUILD_SCRIPTS_PATHS[@]}" "${BUILD_SCRIPT_PATH}")
      break
    fi
    # stop once the repository root has been checked
    if [[ $path -ef $SRC_DIR ]]
    then
      break
    fi
    path="$(realpath -s "$path"/..)"
  done
done

# leave only unique build scripts: if different folders will produce the same build script on previous step, we want to run script only once
UNIQUE_BUILD_SCRIPTS_PATHS=()
for i in "${!BUILD_SCRIPTS_PATHS[@]}"; do
  is_same=false
  for j in "${!UNIQUE_BUILD_SCRIPTS_PATHS[@]}"; do
    if [[ "${BUILD_SCRIPTS_PATHS[$i]}" == "${UNIQUE_BUILD_SCRIPTS_PATHS[$j]}" ]]; then
      is_same=true
      break
    fi
  done
  if [ $is_same = false ]; then
    UNIQUE_BUILD_SCRIPTS_PATHS=("${UNIQUE_BUILD_SCRIPTS_PATHS[@]}" "${BUILD_SCRIPTS_PATHS[$i]}")
  fi
done

# run all found build scripts
for i in "${!UNIQUE_BUILD_SCRIPTS_PATHS[@]}"; do
  BUILD_SCRIPT_PATH=${UNIQUE_BUILD_SCRIPTS_PATHS[$i]}
  echo "Executing <$BUILD_SCRIPT_PATH>:"
  DIRNAME=$(dirname "${BUILD_SCRIPT_PATH}")
  cd "$DIRNAME"
  bash ./build.sh
done

This bash script runs after CodeBuild checks out code from the git repository (see buildspec.yml):

build:
  commands:
    - source get_source.sh
    - cp builds_runner.sh $REPOSITORY_NAME && cd $REPOSITORY_NAME && bash builds_runner.sh
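To make the lookup performed by builds_runner.sh easier to follow, the sketch below models the same idea as a pure JavaScript function: for every changed file, walk up the directory tree until a directory with a build.sh is found, and keep each build script only once. This is an illustration rather than a replacement for the bash script. The function name findBuildScripts and the buildScriptDirs parameter are hypothetical, and the sketch does not check the file system, so it does not reproduce the bash script's skipping of directories that no longer exist.

```javascript
const path = require("path");

// changedFiles: repository-relative paths, as printed by `git diff --name-only`.
// buildScriptDirs: Set of directories that contain a build.sh
// ("." stands for the repository root).
function findBuildScripts(changedFiles, buildScriptDirs) {
  const scripts = new Set(); // a Set keeps each build script only once
  for (const file of changedFiles) {
    let dir = path.posix.dirname(file);
    // Walk up from the changed file's directory until a build.sh is found
    // or the top of the tree has been checked.
    while (true) {
      if (buildScriptDirs.has(dir)) {
        scripts.add(path.posix.join(dir, "build.sh"));
        break;
      }
      const parent = path.posix.dirname(dir);
      if (parent === dir) {
        break; // reached the top ("." for relative paths)
      }
      dir = parent;
    }
  }
  return Array.from(scripts);
}

// The example from this section: project1 and project3 changed, project2 did not.
const scripts = findBuildScripts(
  [
    "project1/src/main/java/com/example/MyApplication1.java",
    "project3/Dockerfile",
  ],
  new Set(["project1", "project2", "project3"])
);
console.log(scripts); // [ 'project1/build.sh', 'project3/build.sh' ]
```

A change to a file that has no build.sh in any parent directory (for example, a README in the repository root) results in no build at all, which matches the behavior of the bash script.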

Deployment of Git Pipelines

Now that we have discussed how Git pipelines work, I’d like to describe the process of deploying them.

IaC

We use the Terraform Infrastructure as Code (IaC) tool for deploying our infrastructure. To create an AWS CodePipeline with the settings discussed above, we use the following code:

resource "aws_codepipeline" "pipelines" {
  for_each = local.pipelines
  name     = "git-${each.key}-${local.branch}"
  role_arn = aws_iam_role.codepipeline.arn

  stage {
    name = "Source"
    action {
      name             = "Source"
      category         = "Source"
      owner            = "AWS"
      provider         = "S3"
      version          = "1"
      output_artifacts = ["source_output"]
      configuration = {
        S3Bucket             = local.build_bucket
        S3ObjectKey          = local.path_to_build_scripts
        PollForSourceChanges = false
      }
    }
  }

  stage {
    name = "Build"
    action {
      name            = "Build"
      category        = "Build"
      owner           = "AWS"
      provider        = "CodeBuild"
      input_artifacts = ["source_output"]
      version         = "1"
      configuration = {
        ProjectName = local.codebuild_project_name
        EnvironmentVariables = jsonencode([
          {
            name  = "REPOSITORY_NAME"
            value = each.key
            type  = "PLAINTEXT"
          },
          {
            name  = "BRANCH_NAME"
            value = local.branch
            type  = "PLAINTEXT"
          }
        ])
      }
    }
  }

  artifact_store {
    type     = "S3"
    location = local.build_bucket
  }
}

For creating our AWS CodeBuild project we use the code below:

resource "aws_codebuild_project" "project" {
  name          = local.codebuild_project_name
  service_role  = aws_iam_role.codebuild.arn
  build_timeout = 30

  source {
    type      = "CODEPIPELINE"
    buildspec = "buildspec.yml"
  }

  environment {
    type                        = "LINUX_CONTAINER"
    image                       = "${local.ecr_repository_url}:latest"
    compute_type                = "BUILD_GENERAL1_SMALL"
    privileged_mode             = true
    image_pull_credentials_type = "SERVICE_ROLE"
  }

  vpc_config {
    vpc_id             = local.vpc_id
    subnets            = local.subnet_ids
    security_group_ids = [aws_security_group.codebuild.id]
  }

  artifacts {
    type = "CODEPIPELINE"
  }

  cache {
    type  = "LOCAL"
    modes = ["LOCAL_DOCKER_LAYER_CACHE"]
  }
}

Our Terraform script also creates all the other resources that are shared by all pipelines, for example the IAM roles and policies, the security group, and the Lambda function that automates creating, starting, and deleting Git pipelines.

Deployment Instructions

Note: I use the ca-central-1 AWS region in the pipeline code. Please change it to your desired region.

Our git-pipeline module requires some resources to exist before it can be deployed and used: a VPC, private subnets, build and artifacts Amazon S3 buckets, an Amazon ECR repository, and VPC Endpoints for Amazon S3 and CloudWatch Logs (for transferring your artifacts and build logs over AWS private networks rather than the public Internet). If you are already using AWS Developer Tools, you most likely already have these resources in your AWS account. In this case, please modify the /tf/ci/git-pipeline module to use your resources. Otherwise, you can use the sample code from my previous article.

Note: All modules are examples only, and are created to show the working concept. For production deployments, the code should be modified to add security and other features. For example: enable versioning, encryption and lifecycle policies for S3 buckets; add fine-grained ingress/egress rules for Security groups; secure access to VPC Endpoints, etc.

There is nothing special about deploying Git pipelines:

- Modify the constants and references to terraform_remote_state in the Terraform module, as well as in index.js from lambda_handler.zip, and run Terraform to provision the pipelines in AWS.

- Build and upload your custom Docker build image to your Amazon ECR build repository.

- Prepare a build.sh script, create a branch in the project that you set up to work with Git pipelines in the first step, and commit your build.sh script to that branch. This should automatically create a new pipeline for your branch and start the build. You should be able to see it in the AWS console. If for some reason it doesn’t work, please check the logs of your Lambda function.

Conclusion

In this article we discussed the Git pipelines architecture, adding CI automation, pipelines infrastructure provisioning and writing build scripts for your projects. Using the proposed approach and provided code, you can build your own CI solution.

I hope you enjoyed this article and that you’ll find it useful for building your own CI solutions for your projects.

Happy coding!

Disclaimer: Code and article content are provided ‘as-is’, without any express or implied warranty. In no event will the authors be held liable for any damages arising from the use of code or article content.

You can find sample sources for building this solution here.