AWS FARGATE-BASED SOLUTION FOR RUNNING BATCH, WEB AND L4 APPLICATIONS

Abstract: To run containerized applications in AWS Fargate, many resources need to be created. Depending on the requirements, different types of applications (batch, web and L4 applications, as well as services) might need to run in ECS clusters, which further complicates the solution. In addition, you might want to reuse load balancers and other resources in your staging AWS account to give every development team its own ECS cluster. Adapting the solution from this article will let you get the benefits of AWS Fargate faster and more easily.

Introduction

Note: This article assumes that you have solid experience with AWS Fargate and Terraform.

AWS Fargate is a serverless compute engine for running containerized applications. It is a managed service, which eliminates the need to administer and patch virtual servers. You can use it to run different types of applications, such as:

- web applications;

- L4 applications;

- batch applications;

- services.

These applications use common resources. The solution in this article has been developed with the following design considerations in mind:

- to achieve maximum code reuse;

- to avoid the creation of resources conditionally, which complicates logic;

- to allow each application type to live in its own module, which opens an opportunity for easier customization of modules.

This resulted in the following module composition:

batch

└ template

service

└ template

service-lb

└ template

service-alb

└ service-lb

service-nlb

└ service-lb

template

Let me describe how each Terraform module works.

How Modules Work

template Module

The template module is used by all other modules and contains common resources. It creates resources such as:

- ECS task definition, which is used to provide ECS with all task configuration parameters;

- CloudWatch log group for the application to send logs to;

- Fargate execution role and task role with all required permissions to start and run a task;

- Security group.

Note: AWS SSM Parameter Store is good at storing application parameters and secrets, and I have added permissions for accessing it by default to all application types.
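
As an illustration, the core of such a template module could look like the sketch below. It is not the author's actual code: the variable names, the log group naming scheme, the parameter path under /app_name/ and the CPU and memory sizes are all assumptions made for the example, and the security group is left out for brevity.

```hcl
# Hypothetical inputs; the real template module may name them differently.
variable "app_name" { type = string }
variable "image" { type = string }
variable "region" { type = string }

resource "aws_cloudwatch_log_group" "app" {
  name              = "/ecs/${var.app_name}"
  retention_in_days = 30
}

# Trust policy that lets ECS tasks assume the roles below.
data "aws_iam_policy_document" "assume_ecs_tasks" {
  statement {
    actions = ["sts:AssumeRole"]
    principals {
      type        = "Service"
      identifiers = ["ecs-tasks.amazonaws.com"]
    }
  }
}

# Execution role: used by Fargate to pull the image and write logs.
resource "aws_iam_role" "execution" {
  name               = "${var.app_name}-execution"
  assume_role_policy = data.aws_iam_policy_document.assume_ecs_tasks.json
}

resource "aws_iam_role_policy_attachment" "execution" {
  role       = aws_iam_role.execution.name
  policy_arn = "arn:aws:iam::aws:policy/service-role/AmazonECSTaskExecutionRolePolicy"
}

# Task role: used by the application itself.
resource "aws_iam_role" "task" {
  name               = "${var.app_name}-task"
  assume_role_policy = data.aws_iam_policy_document.assume_ecs_tasks.json
}

# Assumed convention: every application reads parameters under /<app_name>/.
data "aws_iam_policy_document" "read_parameters" {
  statement {
    actions   = ["ssm:GetParameter", "ssm:GetParameters", "ssm:GetParametersByPath"]
    resources = ["arn:aws:ssm:*:*:parameter/${var.app_name}/*"]
  }
}

resource "aws_iam_role_policy" "task_read_parameters" {
  name   = "read-parameters"
  role   = aws_iam_role.task.id
  policy = data.aws_iam_policy_document.read_parameters.json
}

resource "aws_ecs_task_definition" "app" {
  family                   = var.app_name
  requires_compatibilities = ["FARGATE"]
  network_mode             = "awsvpc"
  cpu                      = 256
  memory                   = 512
  execution_role_arn       = aws_iam_role.execution.arn
  task_role_arn            = aws_iam_role.task.arn

  container_definitions = jsonencode([{
    name      = var.app_name
    image     = var.image
    essential = true
    logConfiguration = {
      logDriver = "awslogs"
      options = {
        "awslogs-group"         = aws_cloudwatch_log_group.app.name
        "awslogs-region"        = var.region
        "awslogs-stream-prefix" = var.app_name
      }
    }
  }])
}
```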

batch Module

This module is used to run batch applications, i.e. applications that:

- can be run on a schedule, or on demand by starting them manually if needed;

- exit after they finish processing;

- do not expose any ports, but can interact with other applications over a network, database, etc.

We are not using an ECS service for this purpose, as it would launch a new instance of the batch application after the previous one exits. Instead, we are starting an ECS task in a cluster.

This module creates resources for scheduling a batch application execution using a CloudWatch event rule and calls the template module to create common resources.
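
A minimal sketch of the scheduling part is shown below. The input variables and the template module outputs (task_definition_arn, security_group_id) are hypothetical names chosen for the example, and the IAM role that allows CloudWatch Events to run the task is assumed to be created elsewhere.

```hcl
# Hypothetical inputs of the batch module.
variable "app_name" { type = string }
variable "image" { type = string }
variable "region" { type = string }
variable "cluster_arn" { type = string }
variable "subnet_ids" { type = list(string) }
variable "events_role_arn" { type = string }

variable "schedule_expression" {
  type    = string
  default = "rate(1 hour)"
}

# Common resources (task definition, roles, log group) come from the template module.
module "template" {
  source   = "../template"
  app_name = var.app_name
  image    = var.image
  region   = var.region
}

resource "aws_cloudwatch_event_rule" "schedule" {
  name                = "${var.app_name}-schedule"
  schedule_expression = var.schedule_expression
}

# The target starts a one-off Fargate task in the cluster; no ECS service is involved.
resource "aws_cloudwatch_event_target" "run_task" {
  rule     = aws_cloudwatch_event_rule.schedule.name
  arn      = var.cluster_arn
  role_arn = var.events_role_arn

  ecs_target {
    launch_type         = "FARGATE"
    task_count          = 1
    task_definition_arn = module.template.task_definition_arn # assumed output name

    network_configuration {
      subnets         = var.subnet_ids
      security_groups = [module.template.security_group_id] # assumed output name
    }
  }
}
```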

service Module

The service module is used to provision applications that need to run all the time. These applications do not expose any ports, but can interact with other applications over a network, database, etc.

In this module, we create an ECS service and call the template module.
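
For illustration, the ECS service in such a module might be declared roughly as follows. The variable names and the template module outputs are assumptions, not the author's actual interface.

```hcl
# Hypothetical inputs of the service module.
variable "app_name" { type = string }
variable "image" { type = string }
variable "region" { type = string }
variable "cluster_arn" { type = string }
variable "subnet_ids" { type = list(string) }

module "template" {
  source   = "../template"
  app_name = var.app_name
  image    = var.image
  region   = var.region
}

# ECS keeps desired_count tasks running and replaces any task that stops.
resource "aws_ecs_service" "service" {
  name            = var.app_name
  cluster         = var.cluster_arn
  task_definition = module.template.task_definition_arn # assumed output name
  desired_count   = 1
  launch_type     = "FARGATE"

  network_configuration {
    subnets         = var.subnet_ids
    security_groups = [module.template.security_group_id] # assumed output name
  }
}
```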

service-lb Module

This module creates common resources for both web and L4 applications:

- ECS service, which registers itself on the load balancer;

- common resources, defined in the template module.
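
The difference from the plain service module is the load_balancer block, which tells ECS to register every task in a target group. The sketch below assumes that the target group ARN and the container port are passed in by the calling module; all input and output names are hypothetical.

```hcl
# Hypothetical inputs of the service-lb module.
variable "app_name" { type = string }
variable "image" { type = string }
variable "region" { type = string }
variable "cluster_arn" { type = string }
variable "subnet_ids" { type = list(string) }
variable "target_group_arn" { type = string }
variable "container_port" { type = number }

module "template" {
  source   = "../template"
  app_name = var.app_name
  image    = var.image
  region   = var.region
}

resource "aws_ecs_service" "service" {
  name            = var.app_name
  cluster         = var.cluster_arn
  task_definition = module.template.task_definition_arn # assumed output name
  desired_count   = 1
  launch_type     = "FARGATE"

  network_configuration {
    subnets         = var.subnet_ids
    security_groups = [module.template.security_group_id] # assumed output name
  }

  # Tasks are registered in the ALB or NLB target group created by the caller.
  load_balancer {
    target_group_arn = var.target_group_arn
    container_name   = var.app_name
    container_port   = var.container_port
  }
}
```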

service-alb Module

This module creates web services that register on an ALB (Application Load Balancer). It creates the following resources:

- ALB listener rule;

- Security Group for the web service;

- ALB target group;

- common resources, by calling the service-lb module.
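
As a rough sketch, the ALB target group and the call to the service-lb module could look like this. The resource name aws_lb_target_group.fargate_service matches the listener rule shown later in this article; the variables, the health check path and the service-lb inputs are assumptions, and the remaining service-lb inputs are omitted.

```hcl
# Hypothetical inputs of the service-alb module.
variable "service_name" { type = string }
variable "container_port" { type = number }
variable "vpc_id" { type = string }

resource "aws_lb_target_group" "fargate_service" {
  name        = var.service_name
  port        = var.container_port
  protocol    = "HTTP"
  target_type = "ip" # Fargate tasks use awsvpc networking, so targets are IP addresses
  vpc_id      = var.vpc_id

  health_check {
    path    = "/${var.service_name}" # assumed health check path
    matcher = "200"
  }
}

module "service_lb" {
  source           = "../service-lb"
  app_name         = var.service_name
  container_port   = var.container_port
  target_group_arn = aws_lb_target_group.fargate_service.arn
  # Other inputs (image, cluster, subnets) are omitted in this sketch.
}
```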

service-nlb Module

This module creates services that need to listen on TCP/UDP ports and register on an NLB (Network Load Balancer). It creates the following resources:

- NLB listener;

- NLB target group;

- common resources, by calling the service-lb module.
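
A minimal sketch of the NLB-specific resources for a TCP service is shown below. The variable names are hypothetical, and the NLB itself is assumed to exist already and to be passed in by its ARN.

```hcl
# Hypothetical inputs of the service-nlb module.
variable "cluster_name" { type = string }
variable "service_name" { type = string }
variable "vpc_id" { type = string }
variable "nlb_arn" { type = string }
variable "listener_port" { type = number }
variable "container_port" { type = number }

resource "aws_lb_target_group" "fargate_service" {
  name        = "${var.cluster_name}-${var.service_name}"
  port        = var.container_port
  protocol    = "TCP"
  target_type = "ip" # required for Fargate tasks
  vpc_id      = var.vpc_id
}

# An NLB has no path or host rules: each service needs its own listener port.
resource "aws_lb_listener" "service" {
  load_balancer_arn = var.nlb_arn
  port              = var.listener_port
  protocol          = "TCP"

  default_action {
    type             = "forward"
    target_group_arn = aws_lb_target_group.fargate_service.arn
  }
}
```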

How to Use Modules in Different AWS Accounts

Now that we have discussed the module structure, let’s move on to the topic of how to use them. Imagine that we have prod and staging AWS accounts. In this case, we can set up the following module structure in each account:

├──modules

└──{AWS_ACCOUNT_NAME}/{AWS_REGION}/{VPC_NAME}/apps

├── alb-internal

├── nlb-internal

├── cluster1

│ ├── cluster

│ ├── batchapp1

│ ├── service1

│ ├── tcpapp1

│ └── webapp1

├── cluster2

│ ├── cluster

│ ├── batchapp1

│ ├── service1

│ ├── tcpapp1

│ └── webapp1

...

Provisioning the same applications in several ECS clusters in the same AWS account allows us to achieve the following benefits:

- an improved development and testing experience for developer teams, each of which can use a dedicated cluster in the staging AWS account;

- the ability to set up Blue/Green deployment in the prod AWS account;

- cost savings from reusing common resources across several clusters.

Note: cluster1.tcpapp1 and cluster2.tcpapp1 should listen on different ports, as they use the same NLB.
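
To illustrate the note, the two deployments of tcpapp1 could differ only in the cluster name and the listener port. The module source path, the input names and the port numbers below are placeholders; in the directory layout above, each call would live in its own application directory.

```hcl
# cluster1/tcpapp1 (hypothetical inputs; adjust the source path to your layout)
module "cluster1_tcpapp1" {
  source        = "../../modules/service-nlb"
  cluster_name  = "cluster1"
  service_name  = "tcpapp1"
  listener_port = 5000 # must be unique on the shared NLB
}

# cluster2/tcpapp1
module "cluster2_tcpapp1" {
  source        = "../../modules/service-nlb"
  cluster_name  = "cluster2"
  service_name  = "tcpapp1"
  listener_port = 5001 # different port, same NLB
}
```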

Notes on Reusing ALB in Several Clusters

Let’s say we have 3 web applications, deployed in 2 clusters:

├── cluster1

│ ├── cluster

│ ├── webapp1

│ ├── webapp2

│ └── webapp3

├── cluster2

│ ├── cluster

│ ├── webapp1

│ ├── webapp2

│ └── webapp3

The web applications will use the following URLs on the ALB:

https://cluster1.staging.example.com/webapp1

https://cluster1.staging.example.com/webapp2

https://cluster1.staging.example.com (default website)

https://cluster2.staging.example.com/webapp1

https://cluster2.staging.example.com/webapp2

https://cluster2.staging.example.com (default website)

Note: my modules do not include code to provision SSL certificates or to set up DNS records.

To make this work, I introduced an alb_listener_priority_base parameter in the cluster module, and an alb_listener_priority_offset parameter for every web service. I increment the alb_listener_priority_base by 100 for each next ECS cluster, and specify a unique alb_listener_priority_offset for each web application in the cluster. I use these parameters in the service-alb module in the ALB listener rule resource:

resource "aws_alb_listener_rule" "paths_to_route_to_this_service" {
  priority     = data.terraform_remote_state.cluster.outputs.alb_listener_priority_base + var.alb_listener_priority_offset
  listener_arn = local.load_balancer.listener_arn

  action {
    type             = "forward"
    target_group_arn = aws_lb_target_group.fargate_service.arn
  }

  condition {
    path_pattern {
      values = ["/${var.service_name}*"]
    }
  }

  condition {
    host_header {
      values = ["${var.cluster_name}.${var.env_name}.example.com"]
    }
  }
}
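
The rule above reads the base priority from the cluster's Terraform state. One possible way to wire this up is sketched below, assuming local state files and a cluster directory next to each application directory. The file paths and the validation rule are assumptions; the validation simply keeps every offset inside the block of 100 priorities reserved for a cluster.

```hcl
# --- cluster1/cluster (hypothetical file layout) ---
variable "alb_listener_priority_base" {
  type        = number
  description = "First ALB listener rule priority reserved for this cluster."
}

output "alb_listener_priority_base" {
  value = var.alb_listener_priority_base
}

# --- cluster1/webapp1 ---
data "terraform_remote_state" "cluster" {
  backend = "local"

  config = {
    path = "../cluster/terraform.tfstate" # assumed location of the cluster state
  }
}

variable "alb_listener_priority_offset" {
  type = number

  validation {
    condition     = var.alb_listener_priority_offset >= 0 && var.alb_listener_priority_offset < 100
    error_message = "The offset must be between 0 and 99 to stay inside the cluster's block of priorities."
  }
}
```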

If we use the following values for the alb_listener_priority_base and alb_listener_priority_offset parameters:

cluster1.alb_listener_priority_base = 1

webapp1.alb_listener_priority_offset = 0

webapp2.alb_listener_priority_offset = 1

webapp3.alb_listener_priority_offset = 98

cluster2.alb_listener_priority_base = 101

webapp1.alb_listener_priority_offset = 0

webapp2.alb_listener_priority_offset = 1

webapp3.alb_listener_priority_offset = 98

we will have the following priorities:

https://cluster1.staging.example.com/webapp1: 1

https://cluster1.staging.example.com/webapp2: 2

https://cluster1.staging.example.com (default website): 99

https://cluster2.staging.example.com/webapp1: 101

https://cluster2.staging.example.com/webapp2: 102

https://cluster2.staging.example.com (default website): 199

When a web request comes to the ALB, its listener rules are evaluated in priority order, from the lowest value to the highest. Because each cluster has its own range of priorities, we can easily provision another web application in cluster1 without affecting applications in other clusters:

https://cluster1.staging.example.com/webapp1: 1

https://cluster1.staging.example.com/webapp2: 2

https://cluster1.staging.example.com/webapp4: 3

https://cluster1.staging.example.com (default website): 99
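
The priorities for cluster1 can be reproduced with a few lines of Terraform. This is only a worked example of the arithmetic, not part of the modules: the local names are made up, webapp3 is taken to be the default website as in the listing of parameter values above, and webapp4 is assumed to use offset 2.

```hcl
locals {
  alb_listener_priority_base = 1 # cluster1

  alb_listener_priority_offsets = {
    webapp1 = 0
    webapp2 = 1
    webapp4 = 2  # the newly added application
    webapp3 = 98 # default website, evaluated last within the cluster
  }

  # priority = base + offset, exactly as in the listener rule resource
  priorities = {
    for app, offset in local.alb_listener_priority_offsets :
    app => local.alb_listener_priority_base + offset
  }
}

output "priorities" {
  # Evaluates to { webapp1 = 1, webapp2 = 2, webapp3 = 99, webapp4 = 3 }
  value = local.priorities
}
```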

External and Internal Load Balancers

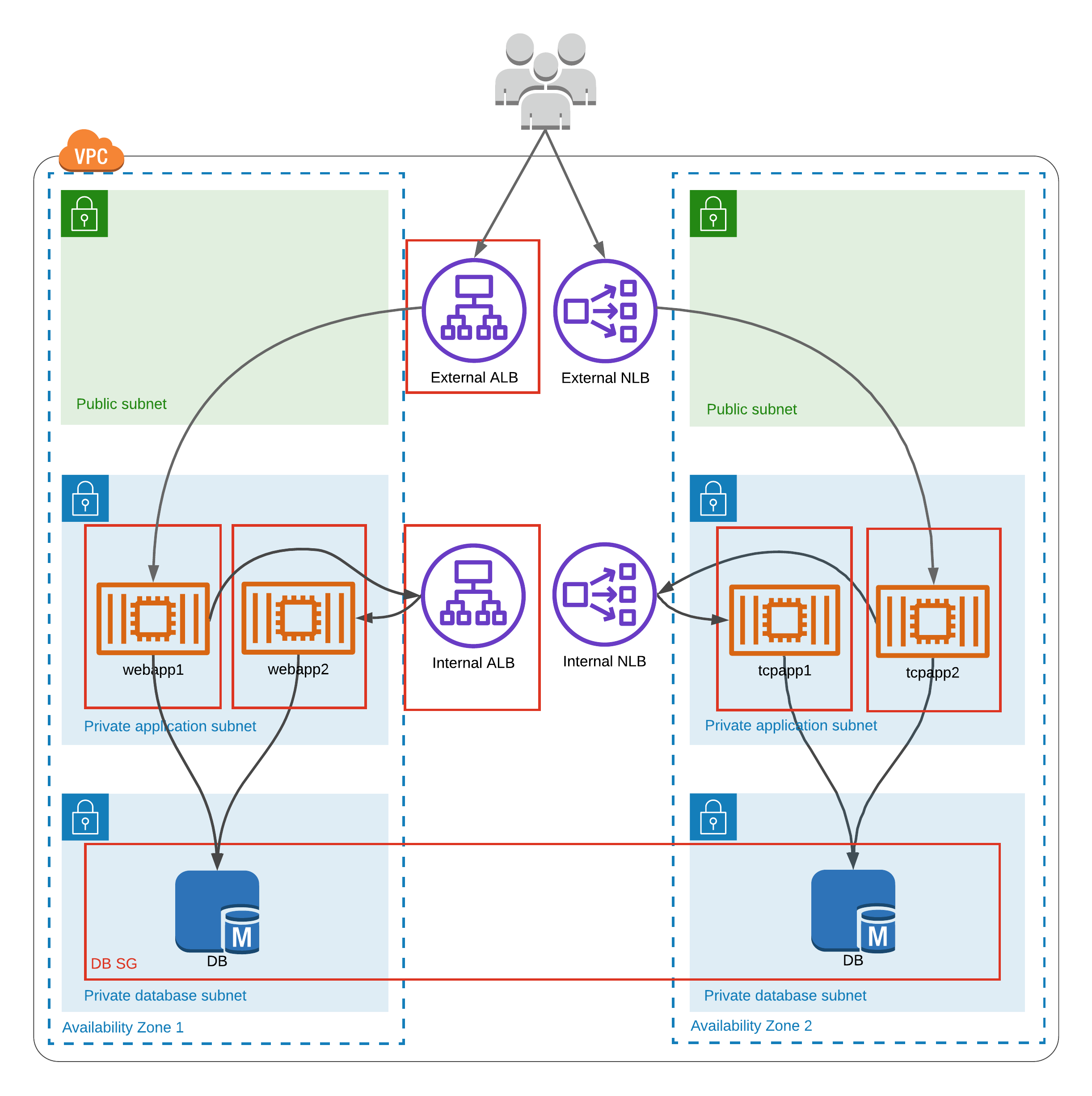

You can easily modify the Terraform modules from this article to use several load balancers for internal and external access. Let’s review the architecture diagram below:

Here, our web and L4 applications are running in private application subnets, but are registered on an external ALB and NLB, through which they become reachable from the Internet. Applications can use databases, which are provisioned in private database subnets for security reasons.

Applications can “talk” to each other using internal load balancers:

- webapp1 sends a request to https://cluster1.staging.example.com/webapp2, which resolves to our internal ALB;

- the ALB processes the request and directs it to webapp2.
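
In Terraform, the difference between the two kinds of load balancer comes down to the internal flag and the subnets they are placed in. A minimal sketch for the ALB pair, with hypothetical names and inputs:

```hcl
# Hypothetical inputs.
variable "public_subnet_ids" { type = list(string) }
variable "private_app_subnet_ids" { type = list(string) }
variable "alb_external_security_group_id" { type = string }
variable "alb_internal_security_group_id" { type = string }

# Reachable from the Internet; placed in public subnets.
resource "aws_lb" "alb_external" {
  name               = "alb-external"
  load_balancer_type = "application"
  internal           = false
  subnets            = var.public_subnet_ids
  security_groups    = [var.alb_external_security_group_id]
}

# Reachable only from inside the VPC; used for application-to-application traffic.
resource "aws_lb" "alb_internal" {
  name               = "alb-internal"
  load_balancer_type = "application"
  internal           = true
  subnets            = var.private_app_subnet_ids
  security_groups    = [var.alb_internal_security_group_id]
}
```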

Deployment Instructions

Note: I use the ca-central-1 AWS region in the pipeline code. Please change it to your desired region.

Our Fargate modules depend on resources that must already exist before deployment: a VPC, subnets, an ALB and an NLB. If you are using AWS Fargate for your web and L4 applications, I bet you already have them provisioned in your AWS accounts. In this case, please modify the modules to use your resources.

Note: All modules in this article are examples only, and are created to show the working concept. For production deployments, the code should be modified to add security and other features. For example: create and attach SSL certificates to the ALB and add Route53 records for your load balancer custom DNS names. The Terraform state should be moved to an S3 bucket to allow the team to provision resources simultaneously, etc.
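
For example, moving the state of one application to S3 could look like the sketch below. The bucket name, the state key and the DynamoDB lock table are placeholders that you would need to create and rename for your own accounts.

```hcl
terraform {
  backend "s3" {
    bucket  = "example-terraform-state" # placeholder bucket name
    key     = "staging/ca-central-1/cluster1/webapp1/terraform.tfstate"
    region  = "ca-central-1"
    encrypt = true

    # Optional: a DynamoDB table used for state locking, so that two people
    # cannot apply changes to the same state at the same time.
    dynamodb_table = "example-terraform-locks" # placeholder table name
  }
}
```

If the cluster state moves to S3 as well, any terraform_remote_state data source that reads it has to be switched from the local backend to the s3 backend with the matching bucket and key.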

Please find the deployment instructions below:

- Modify all required parameters in the modules and make the necessary changes to the module code;

- Provision cluster1;

- Provision all selected services. For example: batchapp1, service1, tcpapp1, webapp1.

Conclusion

In this article, we discussed a solution for provisioning the resources needed to run containerized applications in AWS Fargate. The solution can be used for different application types, such as batch, web and L4 applications, as well as services. We also described the architecture and suggested ways to improve the modules. Using the modules and the provided code, you can create a customized solution for your own real-life needs.

I hope you enjoyed this article and that you’ll find it useful for building your own solutions for running your applications in AWS Fargate.

Happy coding!

Disclaimer: Code and article content are provided ‘as-is’, without any express or implied warranty. In no event will the authors be held liable for any damages arising from the use of code or article content.

You can find sample sources for building this solution here.